
(19) comments

[…] sure you’ve read Understanding Throughput and TCP Windows before watching this video. I mean, you don’t HAVE to, but I recommend […]

Just getting into your site, very interesting articles. I’m not going to pretend I understand everything after the first read, but it covers topics I have been very interested in.

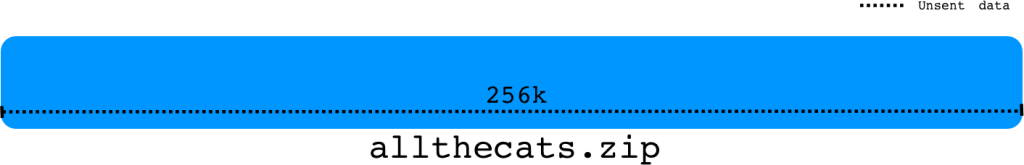

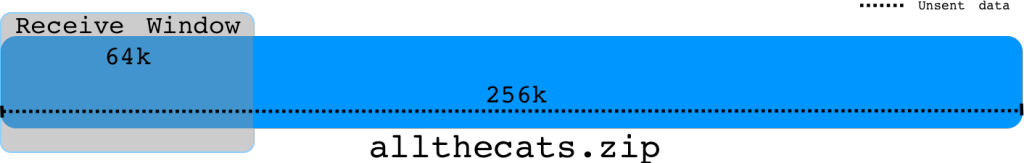

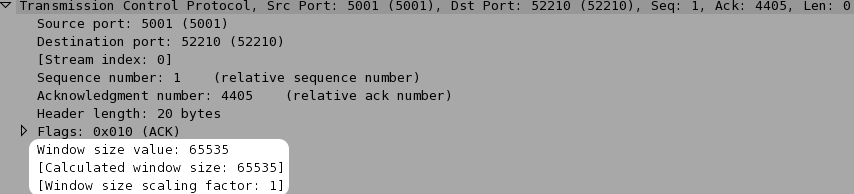

The idea of throughput has always been interesting to me from the practical side. I am interested in determining how to maximize usable throughput for simple things such as data transfer based on which application you use.

The twist, for me, is that I operate in a world where latency can be very high (hundreds to thousands of ms) and the networks are lossy. These massively variable factors result in systems where calculating “optimal” settings for all cases is pretty much impossible, but reading articles like this helps me get a better understanding of which knobs are available to turn and experiment with.

Thanks again for the article. I look forward to reading/watching more of your content.

Kary,

I’ve watched your videos and read your articles. Great job bud. You’ve introduced me to the tcptrace streamgraph that I can’t believe I wasn’t familiar with before reading your website. It’s definitely a great tool within Wireshark. With that said, I’ve seen some patterns lately that have me a bit baffled and was wondering what your opinion is. Here is a picture: http://i.imgur.com/sQ4zVRA.png

This session seems to be hitting the TCP window size ceiling, but if I’m reading the graph correctly, doesn’t this indicate throughput is ABOVE the TCP window size?

Again, great site, and I hope you keep contributing the high quality (and humorous) content.

I believe I answered my own question. I was looking at this stream graph from the sender side. Duh!

Hi, thank you for the article. There is something I don’t understand about Bytes in Flight.

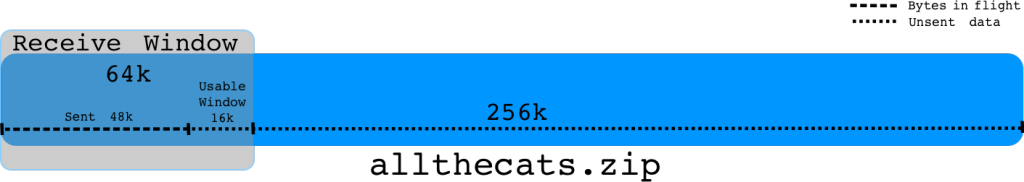

Why do bytes in flight limit the number of bytes that can be transferred “at one time” before waiting for an ACK? I mean, why doesn’t TCP send 64KB at once and then wait for the ACK? Why did it send only 48KB, and then another 16KB, before waiting for the ACK?

[…] Page referenced: Understanding Throughput and TCP Windows […]

“If the bandwidth is 20Mbps and the round trip time (rtt) is 40ms, the BDP is 20000000/8 * .04 = 100k. So 100kB is the maximum amount of data that can be in transit in the network at one time.”

It seems a little strange that the more latency there is in the network, the more bytes can be sent at one time. For example, if the RTT is 1s, is the maximum amount of data that can be sent 20000000/8 * 1 = 2500k?
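The arithmetic in the quoted passage and in the question above can be checked with a short sketch. The function name `bdp_bytes` is made up for illustration; the inputs are the 20 Mbps and 40 ms figures from the quote and the 1 s RTT from the question.

```python
def bdp_bytes(bandwidth_bps: float, rtt_seconds: float) -> float:
    """Bandwidth-delay product in bytes: (bits per second / 8) * round trip time."""
    return bandwidth_bps / 8 * rtt_seconds


# Figures from the quoted passage: 20 Mbps link, 40 ms round trip time.
quoted = bdp_bytes(20_000_000, 0.04)

# Figure from the question: same link, 1 s round trip time.
long_rtt = bdp_bytes(20_000_000, 1.0)

print(f"40 ms RTT: about {quoted / 1000:.0f} kB in flight to fill the link")
print(f"1 s RTT:   about {long_rtt / 1000:.0f} kB in flight to fill the link")
```

The 2500k figure follows from the same formula. A longer round trip does not make the link faster; it means more data has to be in flight at once to keep the same link full while the sender waits for ACKs.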

[…] it took to receive the whole response. The packets of data will have been sent in batches, known as congestion windows, one window at a time, one after the other, with a slight pause at the end of each window for the […]

Well written! Delightful! I’m gonna read more of your site as revision.

Cheers

[…] The actual bandwidth is determined by the TCP window size and the connection latency. See here for more information on TCP window and […]
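As a rough sketch of the relationship in the excerpt above: if the sender can have at most one window of unacknowledged data outstanding, throughput cannot exceed the window size divided by the round trip time. The function name and the 65,535-byte window with a 40 ms RTT are illustrative assumptions, not figures from the linked page.

```python
def max_throughput_bps(window_bytes: int, rtt_seconds: float) -> float:
    """Upper bound on throughput when at most one window is sent per round trip."""
    return window_bytes * 8 / rtt_seconds


# Assumed example: a 65,535-byte window (no window scaling) and a 40 ms RTT.
limit = max_throughput_bps(65_535, 0.04)
print(f"Window-limited ceiling: {limit / 1_000_000:.1f} Mbps")
```

Under these assumptions the ceiling is roughly 13 Mbps, regardless of how much faster the link itself is.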

[…] is calculated using a number of factors such as network protocol, host speed, network path, and TCP buffer space, all of which are affected by the bandwidth available. With Netest, users can use a tool that can […]

I love the analogy. But the BDP is how many balls I can fit into the pipe times 2. The sender is waiting for ACKs. The ACK for the first ball takes time to get back to the sender, so he needs to keep sending and will be able to send the same number of balls again before the first ACK arrives.
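The point above can be put in numbers. This sketch assumes a symmetric path, so the one-way delay is half the RTT, and it reuses the 20 Mbps and 40 ms figures quoted earlier in the thread. The variable names are illustrative.

```python
bandwidth_bps = 20_000_000       # 20 Mbps link
rtt_seconds = 0.04               # 40 ms round trip time
one_way_delay = rtt_seconds / 2  # assumption: the path is symmetric

# Bytes that fit "in the pipe" in one direction.
pipe_one_way = bandwidth_bps / 8 * one_way_delay

# Bytes the sender can emit before the first ACK can possibly return.
bdp = bandwidth_bps / 8 * rtt_seconds

print(f"One-way pipe capacity: {pipe_one_way / 1000:.0f} kB")
print(f"BDP (using RTT):       {bdp / 1000:.0f} kB")
print(f"Ratio:                 {bdp / pipe_one_way:.1f}")
```

Because the BDP formula uses the round trip time rather than the one-way delay, the factor of 2 described above is already included in the 100 kB figure.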
